In a comment to my recent post “Are There Putting Patterns?” ishmail66 speculates that my APBA average putting scores (1.57/hole) may be slightly lower than those of real-life pros from the past couple of seasons (1.61/hole), because my dataset probably has quite a few All-Time Greats (ATG) included in it. He’s not wrong. The dataset I used contained putting results for 18,450 holes, of which 4,896 holes were played using ATG golfers (26.5%).

That got me wondering: Do ATG golfers putt better, on average, than non-ATG golfers? To answer this question, you need to know a little more about my dataset. In addition to all 144 ATG players, the dataset consists mainly of golfers from the 2015, 2016, 2019, 2020, and 2021 seasons plus the original “1962” card set (which I’m told is an amalgamation of season performances around that time rolled into one). The dataset also includes putting data for nine golfers from a mix of other APBA card seasons.

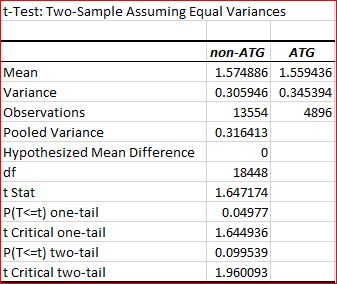

To test my hypothesis, I divided the dataset into two groups, ATG and non-ATG, and ran a t-Test on the number of putts per hole. Here are the results.

What the results mean

ATG golfers, on average, do take fewer putts per hole (1.56) than non-ATG golfers (1.57). Because of the large sample sizes, this result happens to be borderline statistically significant (p < .05). But that conclusion rests on a one-sided test: it only looks for a difference in the ATG golfers’ favor and sets aside the possibility that non-ATG golfers putt better.

Allow me to clarify. I was testing whether ATG golfers putt better, as was implied by ishmail66’s comment. The null hypothesis, that ATG golfers don’t putt better, was rejected (barely) using a one-tailed t-Test. In other words, if ATG golfers really putted no better than everyone else, a gap this large in their favor would turn up by chance less than 5% of the time.
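For anyone who wants to run this kind of test on their own putting logs, here is a minimal sketch in Python. It is not the exact procedure behind the results above: it assumes the unequal-variance (Welch) form of the test statistic and uses a normal approximation for the p-value, which is reasonable only because both groups contain thousands of holes. The function name and the two input lists are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def one_tailed_putting_test(atg_putts, other_putts):
    """Test whether ATG golfers average fewer putts per hole.

    Each argument is a list with one entry per hole (the number of putts).
    Returns the test statistic and the lower-tail p-value.
    Assumptions: Welch-style standard error, normal approximation
    to the t distribution (fine for samples in the thousands).
    """
    n_atg, n_other = len(atg_putts), len(other_putts)
    diff = mean(atg_putts) - mean(other_putts)
    std_err = sqrt(variance(atg_putts) / n_atg + variance(other_putts) / n_other)
    t_stat = diff / std_err
    # A negative statistic favors the ATG golfers, so the one-tailed
    # p-value is the area in the lower tail.
    p_one_tailed = NormalDist().cdf(t_stat)
    return t_stat, p_one_tailed
```

Feed it the per-hole putt counts for each group; a p-value under .05 corresponds to rejecting the null hypothesis described above.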

However, if the question had been asked a different way, I would have gotten a different answer! (Such is the realm of statistics.) If I had asked: “Is there any difference between the putting averages of ATG and non-ATG golfers in either direction?” the answer would have been probably not: the two-tailed t-Test gives p < .10, which misses the usual .05 cutoff.
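The reason the answer flips is that a two-tailed test counts extreme results in both directions, so its p-value is twice the one-tailed value. The sketch below illustrates this with a made-up test statistic of -1.8 (not the statistic from my data) and, as an assumption, a normal approximation suited to large samples.

```python
from statistics import NormalDist


def tail_p_values(test_stat):
    """Return (one_tailed, two_tailed) p-values for a large-sample statistic.

    The one-tailed value is the lower-tail area, so a negative statistic
    counts as evidence for the one-sided hypothesis.
    """
    std_normal = NormalDist()
    one_tailed = std_normal.cdf(test_stat)
    two_tailed = 2 * std_normal.cdf(-abs(test_stat))
    return one_tailed, two_tailed


# -1.8 is an illustrative value only, chosen to land near the borderline.
one, two = tail_p_values(-1.8)
print(f"one-tailed p = {one:.3f}")
print(f"two-tailed p = {two:.3f}")
```

With a statistic in this neighborhood, the one-tailed p-value falls below .05 while the two-tailed p-value lands between .05 and .10, which is the same pattern described above.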

What should we make of this? At least two things:

(1) The answer depends on what we expected going in. If we have reason to believe (independent of these findings) that ATG golfers putt better than today’s “ordinary” pros (mainly from the past 6 years), the data back us up at the 95% confidence level. If we have no reason to expect a difference in either direction, the data aren’t strong enough to claim one at that level; they clear only the looser 90% bar. And if we have some reason to believe that non-ATG golfers should putt better than ATG golfers, the data point the other way.

(2) The second point is that the difference in putting performance is pretty small. It may not matter from a practical standpoint. One one-hundredth of a stroke per hole may be statistically significant given the large sample sizes, but nobody ever won or lost a round of golf by eighteen hundredths of a stroke.

To be fair, I did some rounding here to make this post a little more readable. The actual difference between the two groups is closer to 0.01545 strokes/hole. Over the course of a 72-hole tournament, that would give the ATG golfer a 1.1124-stroke advantage.
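The arithmetic is easy to check. This short sketch assumes a standard four-round, 72-hole tournament, which is the format the 1.1124 figure implies; the variable names are just for illustration.

```python
DIFF_PER_HOLE = 0.01545        # unrounded ATG advantage, strokes per hole
HOLES_PER_ROUND = 18
ROUNDS_PER_TOURNAMENT = 4      # assumption: a standard 72-hole event

per_round = DIFF_PER_HOLE * HOLES_PER_ROUND
per_tournament = per_round * ROUNDS_PER_TOURNAMENT

print(f"Per round:      {per_round:.4f} strokes")
print(f"Per tournament: {per_tournament:.4f} strokes")
```

So the edge works out to a bit over a quarter of a stroke per round, and just over one stroke across four rounds.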